Hi! I am Ruilin Luo. I am currently a third-year master’s student in the Intelligent Interaction Group (IIGroup) at Tsinghua University, supervised by Prof. Yujiu Yang. I received my bachelor’s degree from Huazhong University of Science and Technology, supervised by Prof. Kun He. Prior to that, I spent three happy years of high school at No. 1 Middle School Affiliated to Central China Normal University.

My research interests lie in LLM/MLLM Reasoning and Graph Representation Learning.

🎓 Education

- Sept. 2023 - Present, Master’s Degree in Electronic and Information Engineering, National Scholarship for Graduate Students (2025)

- Sept. 2019 - June 2023, Bachelor’s Degree in Computer Science and Technology, GPA 3.96/4.00.

🎆 News

- Jan. 2026: AVAR has been accepted by ICLR 2026. See you in Rio de Janeiro, Brazil.

- Nov. 2025: Qwen3-VL technical report is released.

- Sept. 2025: Qwen3-VL is released! Try stronger reasoning on Qwen Chat.

- Sept. 2025: URSA has been accepted by NeurIPS 2025. See you in San Diego! A nice research internship at ByteDance. Congrats to Zhuofan.

- Aug. 2025: FairTAG has been accepted by EMNLP 2025. See you in Suzhou! The arXiv preprint and code are coming soon.

- May 2025: Two papers have been accepted to ICML 2025 and ACL 2025, respectively. Congrats to Zicheng and Tianle.

- Sept. 2024: PTD-SQL has been accepted by EMNLP 2024. See you in Miami. A nice research internship at Tencent.

- May 2024: UniBi has been accepted by ECML-PKDD 2024 as an oral paper. See you in Vilnius, Lithuania.

- Feb. 2024: PReSA has been accepted by COLING 2024. See you in Torino, Italy.

💻 Internships

- Mar. 2025 - Present, Research Intern, Multimodal Large Language Model Reasoning.

- Sept. 2024 - Mar. 2025, Research Intern, Multimodal Large Language Model Reasoning.

- Jan. 2024 - July 2024, Research Intern, Large Language Model Code Agent.

📝 Publications

Papers on Large Language Model:

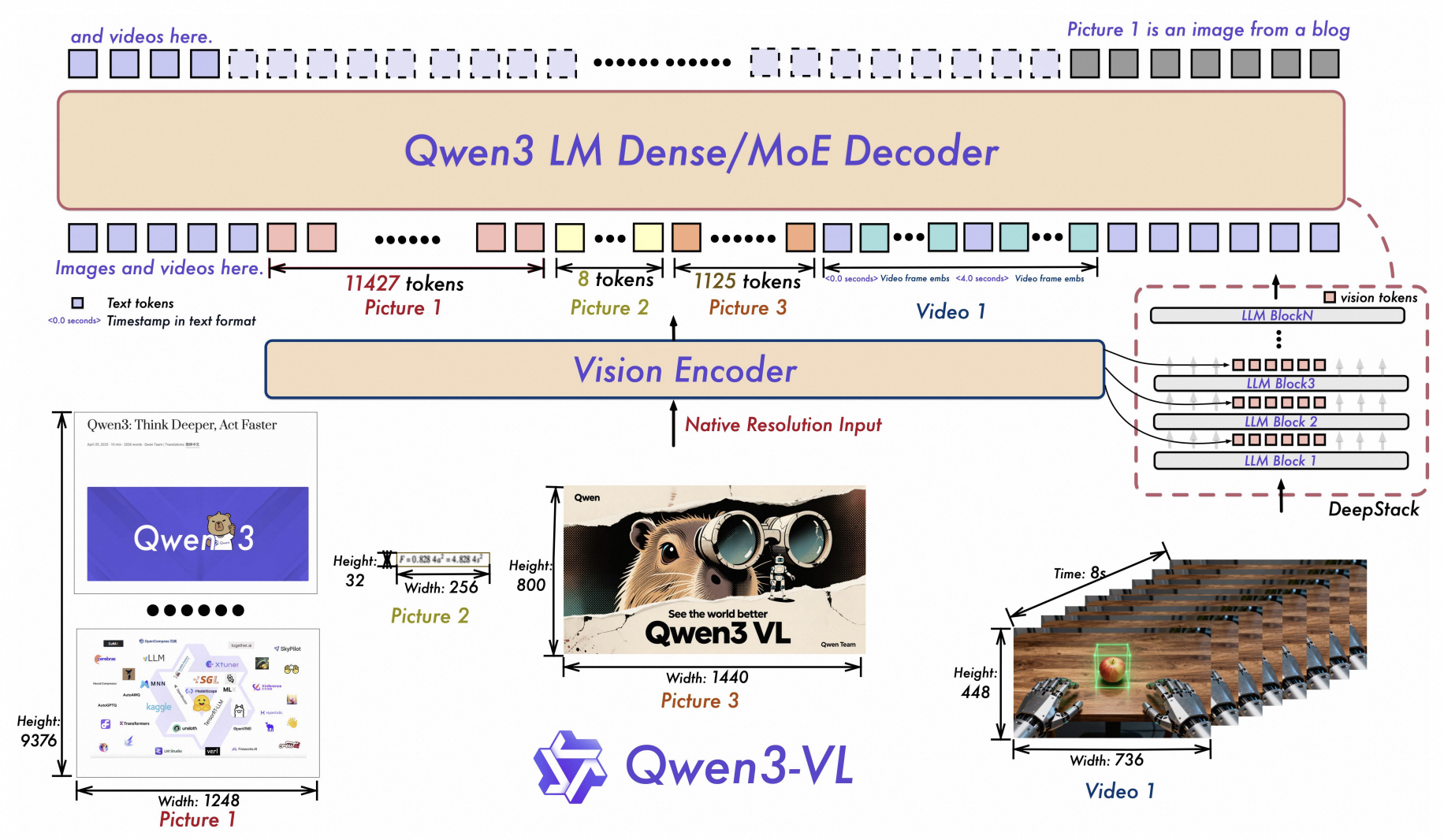

Qwen3-VL Technical Report

Core Contributors

[paper] | [code] | [HuggingFace]

- Qwen3-VL is the most powerful vision-language model in the Qwen series to date.

- I focus on enhanced multimodal reasoning: the model excels in STEM and math, offering causal analysis and logical, evidence-based answers.

- Specifically, I lead the development of data synthesis pipelines and training methodologies that systematically enhance perceptual and reasoning capabilities across all stages, from continual pretraining and supervised fine-tuning to reinforcement fine-tuning and reinforcement learning.

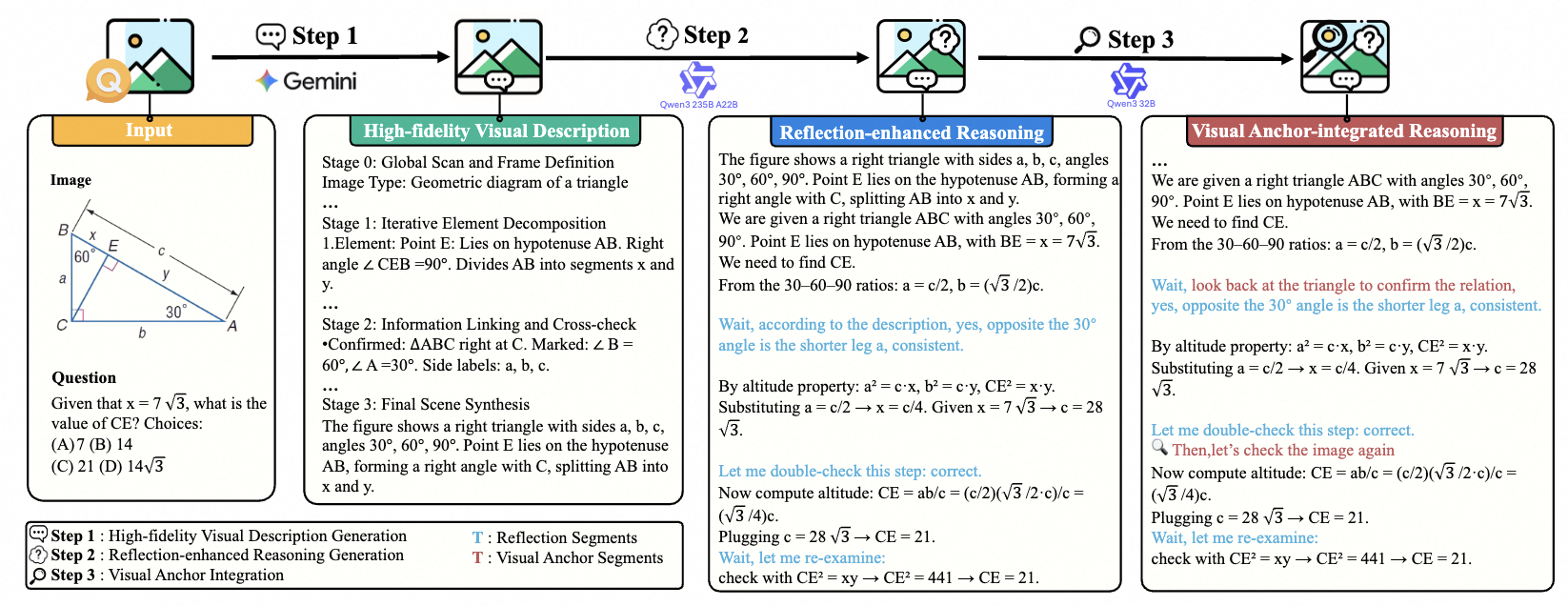

From Narrow to Panoramic Vision: Attention-Guided Cold-Start Reshapes Multimodal Reasoning

Ruilin Luo*, Chufan Shi*, Yizhen Zhang*, Cheng Yang, Songtao Jiang, Tongkun Guan, Ruizhe Chen, Ruihang Chu, Peng Wang, Mingkun Yang, Lei Wang, Yujiu Yang, Junyang Lin, Zhibo Yang

[paper] | [code] | [HuggingFace]

- We propose the VAS attention metric and find that the reasoning performance of Multimodal Large Reasoning Models (MLRM) is highly correlated with VAS.

- We introduce the AVAR training pipeline, which enhances the effectiveness of multimodal reasoning data training by scaling VAS through three stages: data construction, cold-start initialization, and reinforcement learning.

- Our model achieves state-of-the-art results on reasoning and perception benchmarks at the 7B scale.

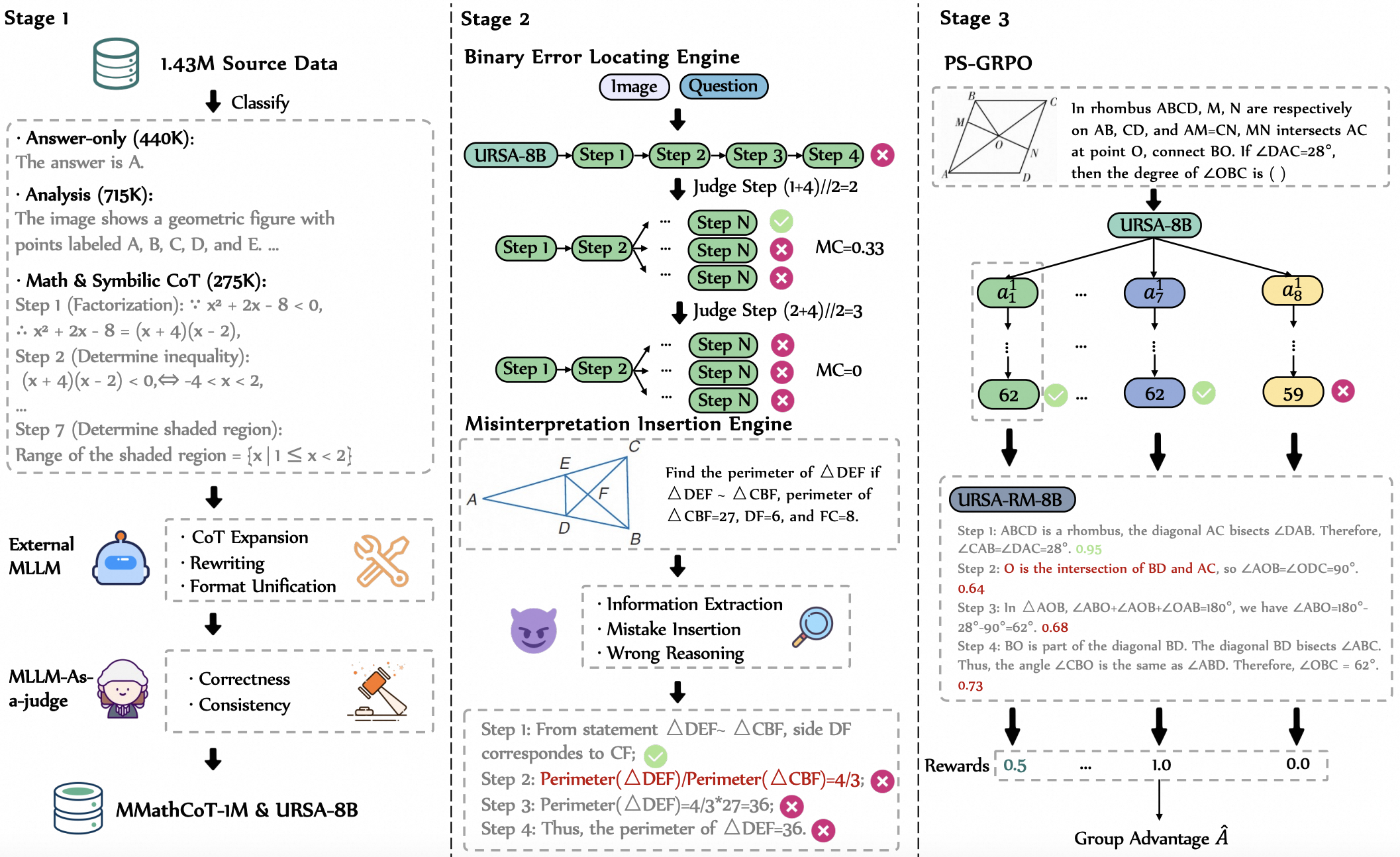

Unlocking Multimodal Mathematical Reasoning via Process Reward Model

Ruilin Luo*, Zhuofan Zheng*, Yifan Wang, Xinzhe Ni, Zicheng Lin, Songtao Jiang, Yiyao Yu, Chufan Shi, Ruihang Chu, Lei Wang, Jin Zeng, Yujiu Yang

[paper] | [code] | [HuggingFace]

- We are the first to propose leveraging a process reward model (PRM) to provide process-level optimization in multimodal mathematical reasoning.

- We introduce a three-stage training framework that addresses reasoning data scarcity, reward data scaling, and online PRM-integrated reinforcement learning (RL).

- Our data is used to train Seed1.5-VL, and we open-source URSA-8B-PS-GRPO, a model whose reasoning capability matches that of the InternVL3 series.

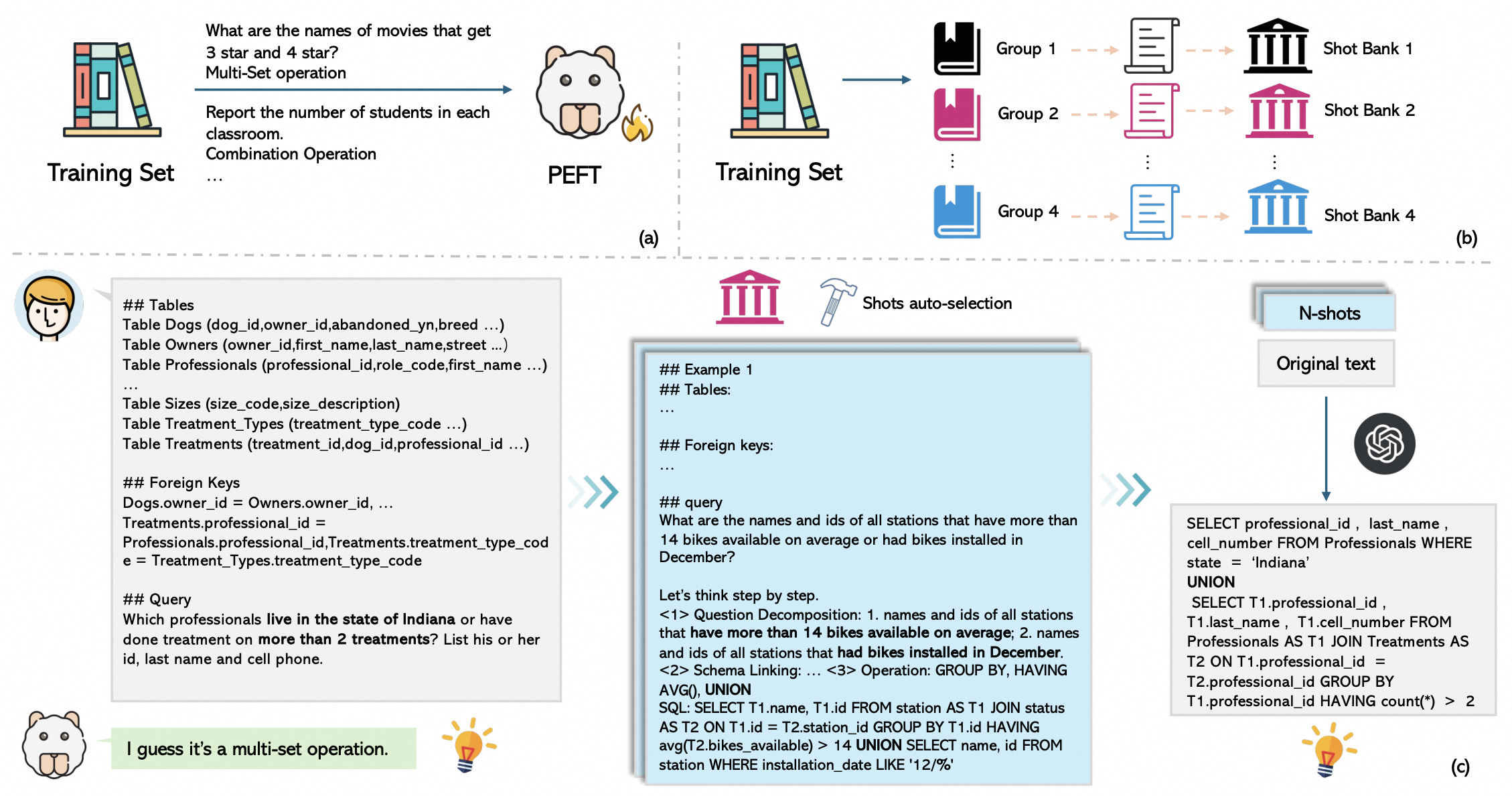

PTD-SQL: Partitioning and Targeted Drilling with LLMs in Text-to-SQL

Ruilin Luo, Liyuan Wang, Binghuai Lin, Zicheng Lin, Yujiu Yang

- We propose a code agent framework for the text-to-SQL task that leverages structured grammars for question-type classification, followed by question-type-specific automated question bank construction and a two-layer few-shot retrieval mechanism.

- We observe that stronger models achieve greater improvements on harder question types when provided with better-matched few-shot chain-of-thought (CoT) prompts, reflecting a learning pattern aligned with human cognition.

- Our method achieves state-of-the-art (SOTA) performance on benchmarks such as Spider and BIRD.

Papers on LLM for Graph Representation Learning:

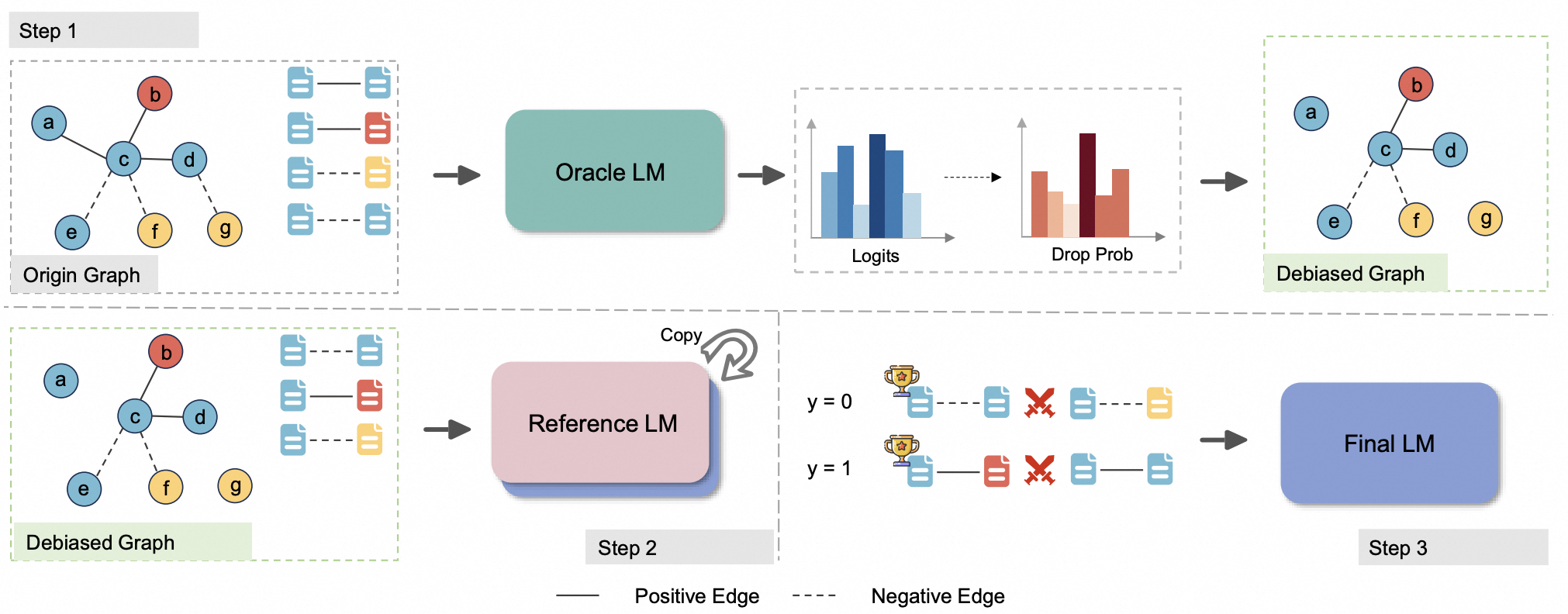

Fair Text-Attributed Graph Representation Learning

Ruilin Luo, Tianle Gu, Lin Wang, Yunfeng Zhou, Lei Wang, Yujiu Yang

- We are the first to identify the bias amplification effect between language model embeddings and GNNs in TAG representation learning.

- We propose solutions from the perspectives of fine-tuning and offline reinforcement learning.

- We provide a theoretical foundation for both the amplification of unfairness and our proposed mitigation approaches.

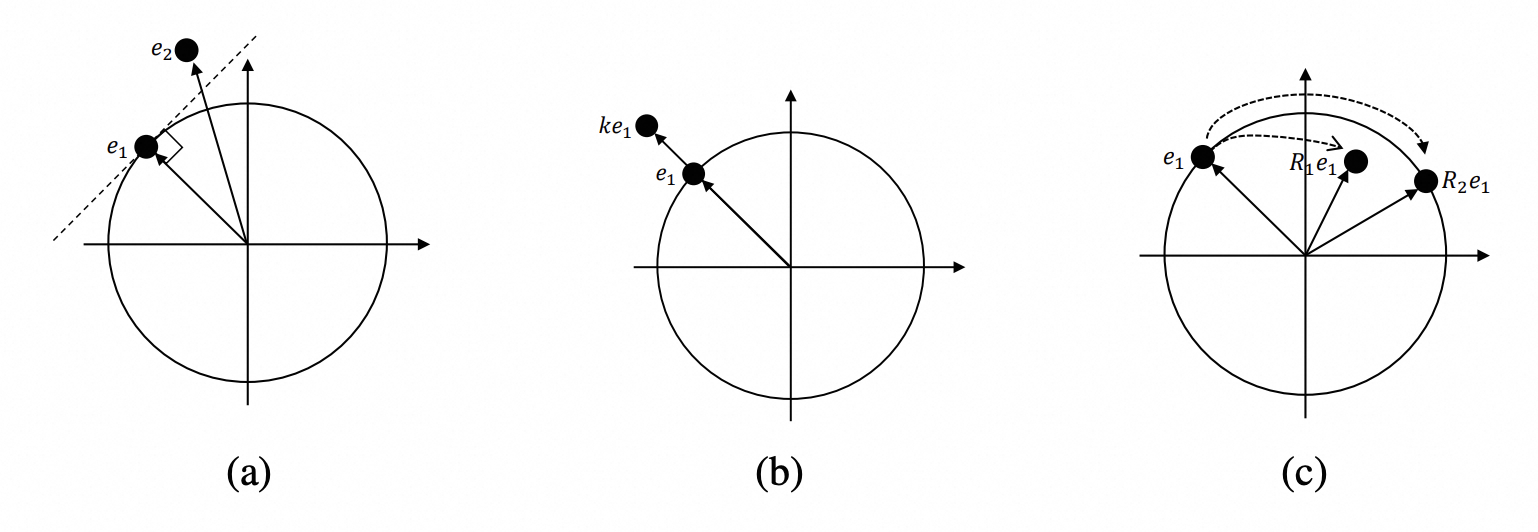

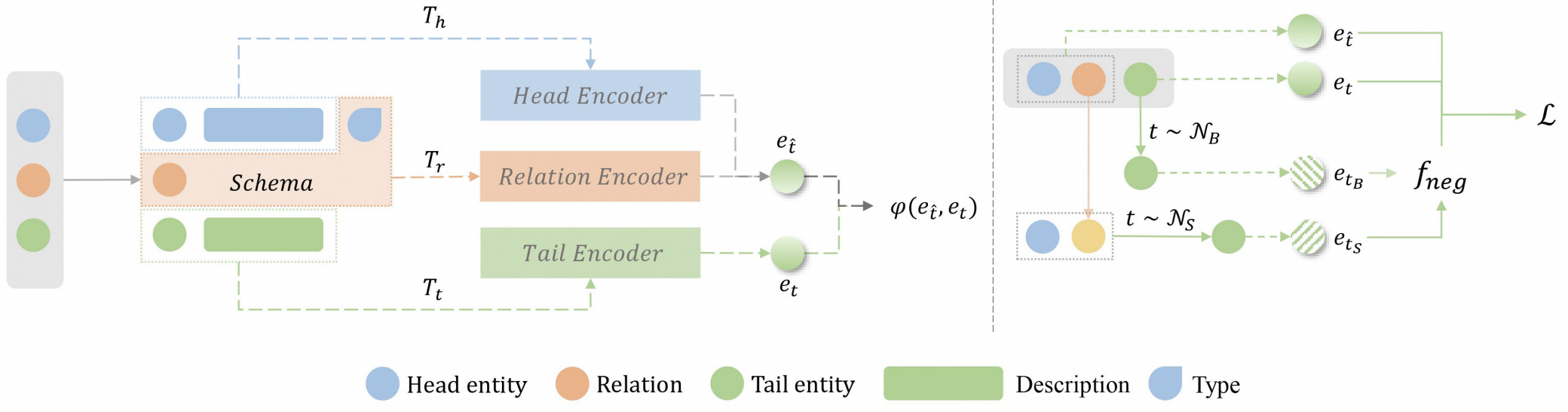

Prior Bilinear Based Models for Knowledge Graph Completion

Jiayi Li*, Ruilin Luo*, Jiaqi Sun, Jing Xiao, Yujiu Yang

- We propose the law of identity as a principle for knowledge graph completion (KGC).

- We introduce a bilinear KGC method that explicitly models the law of identity and outperforms classical approaches such as RESCAL and ComplEx.

- We provide a theoretical derivation for modeling the law of identity.

🏅 Honors and Awards

- National Scholarship

- Tsinghua University Comprehensive First-Class Scholarship

- Huazhong University of Science and Technology Outstanding Student

🎵 Miscellaneous

- Favorite singers/groups: Wang Leehom, JJ Lin, Jacky Cheung, David Tao, Eric Chou, Taeyeon, (G)I-DLE, BIGBANG, IZ*ONE

- Hobbies: Cycling, fitness, concert-going, esports (DOTA 2, CS2), singing, badminton